What the new EU Artificial Intelligence Act means for drones, UTM and eVTOLs

By Philip Butterworth-Hayes

On January 24 this year the European Commission launched a package of measures to support European companies in the development of trustworthy artificial intelligence (AI) “that respects EU values and rules”. According to a Commission press release: “This follows the political agreement reached in December 2023 on the EU AI Act – the world’s first comprehensive law on Artificial Intelligence – which will support the development, deployment and take-up of trustworthy AI in the EU.”

Among the AI initiatives announced by the Commission are: the setting up of AI “factories”, which involves acquiring, upgrading and operating AI-dedicated supercomputers to enable fast machine learning and the training of large General Purpose AI (GPAI) models; new financial support from the Commission through Horizon Europe and the Digital Europe programme dedicated to generative AI, a package which the Commission says will generate an additional overall public and private investment of around €4 billion until 2027; and the launch of the ‘GenAI4EU’ initiative, which aims to support the development of novel use cases and emerging applications in Europe’s 14 industrial ecosystems, as well as in the public sector. “Application areas include robotics, health, biotech, manufacturing, mobility, climate and virtual worlds.”

European Union researchers developing AI and machine learning (ML) tools to support advanced air mobility (AAM) and drone operations in complex airspaces will therefore have new sources of funding and access to more capable data processing resources.

AI is a fundamental prerequisite for UTM systems, according to the latest version (2.0) of the European Union Aviation Safety Agency’s (EASA) Artificial Intelligence Roadmap.

“The integration of manned and unmanned aircraft, while ensuring safe sharing of the airspace between airspace users, and ultimately the implementation of advanced U-space services will only be possible with high levels of automation and use of disruptive technologies like AI/ML. The early implementation of AI/ML solutions will be essential to widely enable complex drone operations in environments which are fast-evolving and where stringent requirements apply, such as urban areas or congested control tower regions…. AI/ML solutions will play a crucial role in enabling safe conduct of the drone operations also in case of a contingency/ emergency situation — for instance, in detecting obstacles (e.g. cranes), detecting or predicting icing conditions, or determining the risk on the ground (e.g. presence of public on a pre-planned landing site).”

EASA highlights three areas in which AI/ML will play a crucial role: detect and avoid (DAA), adaptive deconfliction (by dynamically predicting the risk of encountering an intruder along the flight path and adjusting in advance the drones’ trajectory to ensure continuous separation in space and time) and autonomous localisation/navigation (without GPS).
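To make the adaptive deconfliction idea concrete at the rules-based end of the spectrum, it can be sketched as a classic closest-point-of-approach check: extrapolate both aircraft along straight lines, compute the minimum predicted separation within a look-ahead window and, if it falls below a minimum, search for the smallest heading change that restores it. This is a minimal illustration under stated assumptions, not EASA’s method: the constant-velocity model, the flat local frame, the 50 m separation minimum, the 60 s look-ahead and the names `Track`, `min_separation` and `resolve` are all hypothetical. An ML-based system would instead learn to predict intruder behaviour from data.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    """Straight-line state in a local flat frame (hypothetical simplification)."""
    pos: tuple[float, float, float]  # x, y, z in metres
    vel: tuple[float, float, float]  # vx, vy, vz in metres per second


def min_separation(own: Track, intruder: Track, lookahead: float) -> tuple[float, float]:
    """Return (time_s, distance_m) of closest approach within [0, lookahead].

    Assumes both aircraft keep a constant velocity (no manoeuvres).
    """
    r = [i - o for i, o in zip(intruder.pos, own.pos)]  # relative position
    v = [i - o for i, o in zip(intruder.vel, own.vel)]  # relative velocity
    v2 = sum(c * c for c in v)
    # Unconstrained time of closest approach; 0 if relative velocity is zero.
    t = 0.0 if v2 == 0.0 else -sum(a * b for a, b in zip(r, v)) / v2
    t = min(max(t, 0.0), lookahead)  # only look forward, within the window
    dist = math.sqrt(sum((a + b * t) ** 2 for a, b in zip(r, v)))
    return t, dist


def resolve(own: Track, intruder: Track, sep_min: float = 50.0,
            lookahead: float = 60.0, step_deg: int = 10,
            max_deg: int = 90) -> Optional[int]:
    """Smallest heading change in degrees (+ = counterclockwise, i.e. a left
    turn) that keeps separation >= sep_min, or None if none is found.

    sep_min, lookahead and the heading-only manoeuvre are illustrative
    values, not EASA or U-space requirements.
    """
    for k in range(max_deg // step_deg + 1):
        for sign in ((1,) if k == 0 else (1, -1)):
            ang = math.radians(sign * k * step_deg)
            vx, vy, vz = own.vel
            # Rotate the horizontal velocity; speed and climb rate unchanged.
            turned = Track(own.pos, (vx * math.cos(ang) - vy * math.sin(ang),
                                     vx * math.sin(ang) + vy * math.cos(ang),
                                     vz))
            _, dist = min_separation(turned, intruder, lookahead)
            if dist >= sep_min:
                return sign * k * step_deg
    return None
```

For a head-on encounter between two drones 1 km apart at the same altitude, each flying at 20 m/s, the sketch predicts closest approach after 25 s with zero separation, and a 10° turn restores roughly 87 m. Every decision traces back to an explicit, inspectable rule.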

But. And there is always a “but” in these articles.

There are several areas of AI development that worry EASA and aviation regulators around the world, and these will need far more proactive regulatory oversight than simply highlighting the challenges.

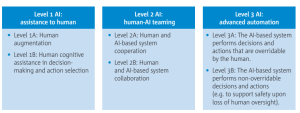

The most complex of these involves the regulation and safety assurance of advanced automation, where the AI-based system performs non-overridable decisions and actions – or level 3B in EASA parlance – especially when the technology used does not rely on rules-based algorithms.

Source: EASA

“Real-time learning in operations is a parameter that is expected to introduce a great deal of complexity in the capability to provide assurance on the ever-changing software,” says EASA. “This is incompatible with current certification processes and would require large changes in the current regulations and guidance. At this stage, this is considered a complex issue that requires the introduction of a strong limitation for safety-related systems in the aviation domain.”

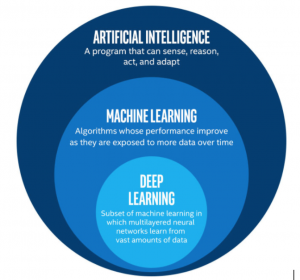

There is an important difference between safety-of-life AI services built on rules-based logic and those built on machine learning calculations.

According to a working paper, “Artificial Intelligence and Machine Learning in ATC”, presented at a recent conference of the International Federation of Air Traffic Controllers’ Associations (IFATCA):

“Artificial intelligence is rule-based: build a computer program with rules, algorithms and knowledge explicitly embedded, based on previously acquired knowledge and high-speed computation of options. Machine learning is data-based: learn bottom-up, from data, by observing and detecting patterns and statistical regularities in historical data to anticipate future events. Machine learning is based on data and input from artificial networks, designed to mimic the functions and the structure of human neurons, as the perfect basic structure of interconnectivity and functionality. Deep learning is a concatenation of mathematical equations and can handle (big amounts of) unstructured data to discover patterns by itself.”

Source: WikiIFATCA
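The IFATCA distinction between rule-based AI and data-based ML can be illustrated with a deliberately trivial sketch. Both functions decide whether a predicted miss distance warrants an alert: the first embeds an expert’s cut-off explicitly, while the second derives its cut-off from labelled historical cases. The 50 m rule, the example data and the function names are hypothetical, and the one-parameter “decision stump” is the simplest possible stand-in for machine learning, far removed from the deep neural networks the paper describes.

```python
# Hypothetical contrast between the two approaches in the IFATCA quote.
# The 50 m rule and any history passed in are invented for illustration only.


def rule_based_alert(miss_distance_m: float) -> bool:
    """Rule-based: an expert writes the cut-off into the program explicitly."""
    return miss_distance_m < 50.0


def learn_threshold(history: list[tuple[float, bool]]) -> float:
    """Data-based: choose the cut-off that best separates past outcomes.

    history is a non-empty list of (predicted miss distance in metres,
    incident occurred) pairs. The learned rule is 'alert if d <= threshold'.
    """
    best_t, best_err = None, None
    for t in sorted({d for d, _ in history}):
        # Count historical cases the candidate rule would get wrong.
        err = sum((d <= t) != incident for d, incident in history)
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t
```

With the invented history `[(12.0, True), (25.0, True), (38.0, True), (55.0, False), (70.0, False), (90.0, False)]`, `learn_threshold` returns 38.0, so the learned rule alerts at or below 38 m rather than below the expert’s 50 m. Feed it different history and the rule changes; scaled up to millions of learned parameters, that dependence on training data is what makes safety assurance so much harder.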

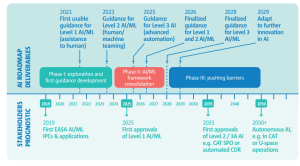

Regulating AI systems whose outcomes are the result of ML and deep learning, rather than explicitly programmed rules, presents aviation regulators and standards bodies with a huge set of challenges, not least the recruitment of AI specialists with the knowledge to provide effective guidance on the subject. Not surprisingly, EASA has built a large safety buffer into its timetable for certifying these new technologies: 2050 and beyond for autonomous AI operations that can cope with sudden turbulent events, or for UTM systems that are entirely autonomous.

Source: EASA

Are these safety buffers realistic? China’s EHang has already started commercial flights with the EH216 autonomous air vehicle and its Unmanned Aircraft Cloud System (UACS) – which integrates airspace management, aircraft control system, flight plans and operations enabling “cluster management of multiple aircrafts within the same airspace” – was certified by the Civil Aviation Administration of China (CAAC) back in August 2023.

This puts China on course to develop fully autonomous air traffic management and UTM systems some twenty years ahead of Europe.

A 2023 research paper for the UK Government by Bryce Tech, “Advanced Air Mobility: An Assessment of a Coming Revolution in Air Transportation and Logistics”, suggests that research into autonomous UTM and ATM technology to support AAM operations has already reached between technology readiness level (TRL) 4 (technology validated in lab) and TRL 6 (technology demonstrated in relevant environment, or industrially relevant environment in the case of key enabling technologies).

All of this suggests that if the EASA timetable for full autonomy is to be brought forward, allowing European industry to become more competitive in the AAM and global UTM markets, aviation researchers on the continent may need to avoid some of the more esoteric ML models for aircraft and airspace management when exploiting the new resources made available alongside the EU’s new AI Act. Instead, they should concentrate on more traditional rules-based AI systems whose outcomes can be logically explained.